Key Takeaways

While working on my bachelor thesis I learnt a great many things, including:

- The PyTorch deep learning framework for building models, processing image data, working on GPUs and loading data with multithreading.
- Advanced image data processing methods.
- Using transfer learning and fine-tuning models to perform well on data from a different domain.
- Explaining and visualizing the decision making of a convolutional model through class activation mapping (CAM).
- Reading and applying knowledge from research papers.

Introduction

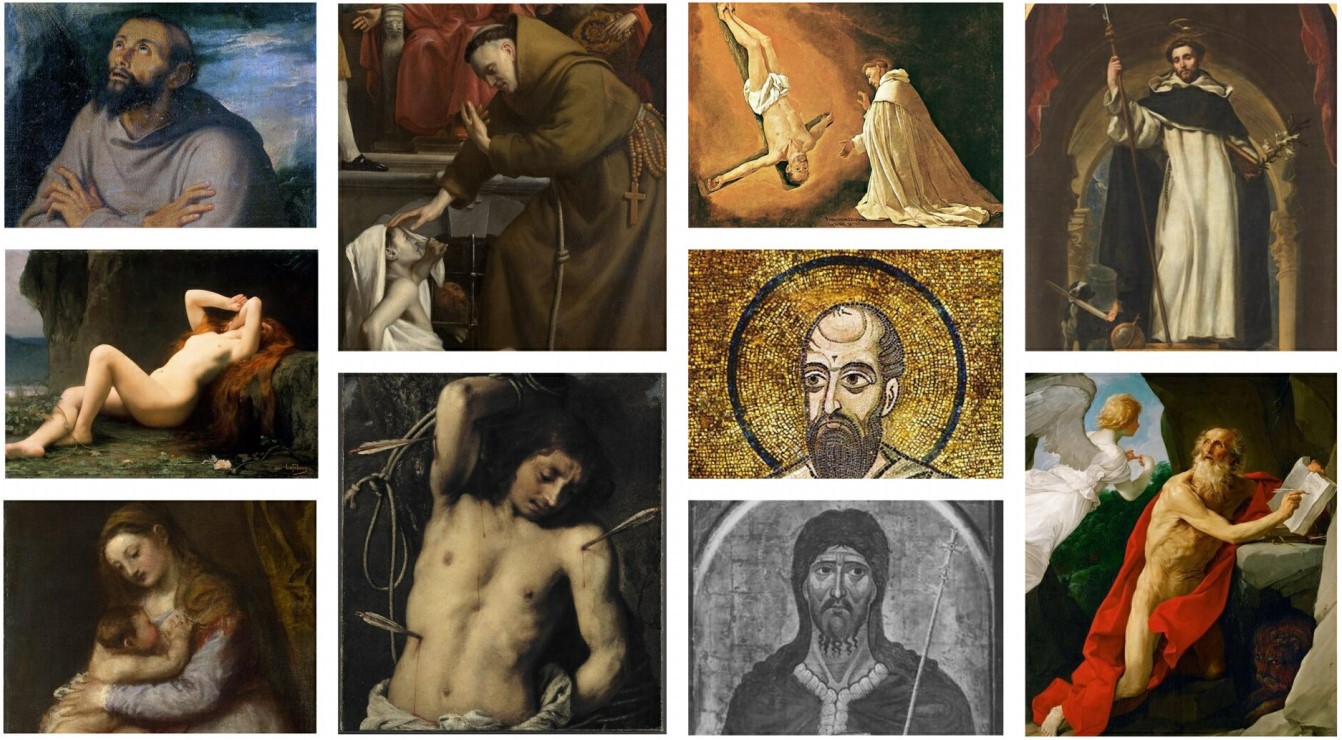

My thesis involved investigating and building a relatively simple convolutional model for recognising Christian saints in Renaissance artworks by the most distinct visual features of their iconography. Such features include the arrows piercing Saint Sebastian, the ointment jar of Mary Magdalene and the lion accompanying Saint Jerome. The aim was to establish a baseline for this task and to investigate how complex or deep such a model needs to be to base its decisions on the iconography of the saint.

A link to my thesis is at the top of this page.

The model

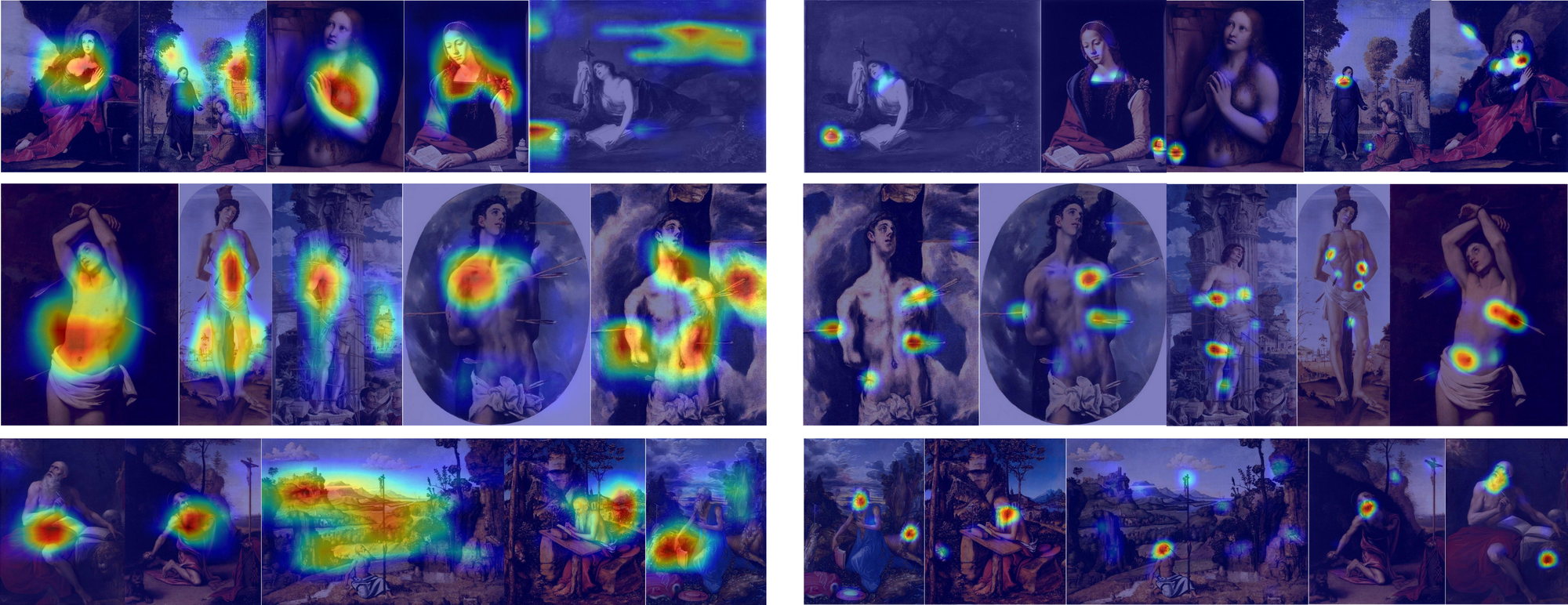

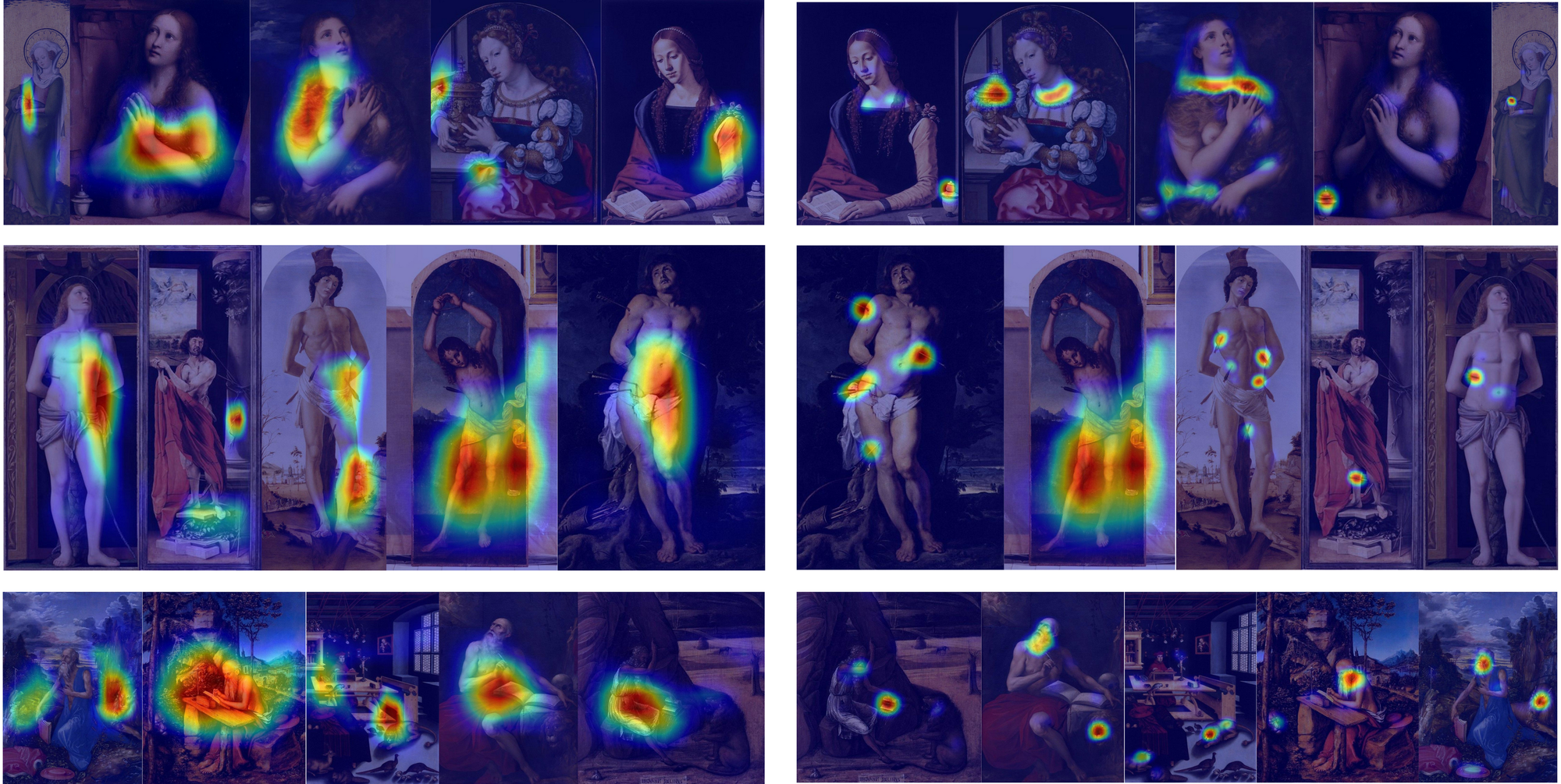

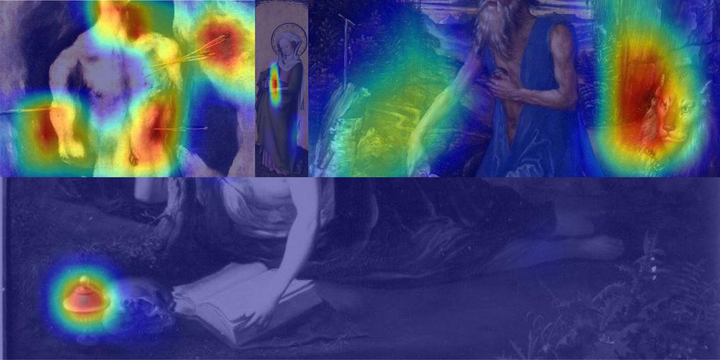

The model chosen was a VGG-16 pretrained on the ImageNet data set. I fine-tuned it on the ArtDL data set (a data set of Christian saints) using three training strategies that varied the data pre-processing techniques and the choice of frozen layers. In addition, I modified the model to be fully convolutional so that it could generate class activation mappings, that is, heatmaps of the features used for inference. The most successful strategy froze the input layer, the first convolutional block and half of the second, and combined this with image normalization, random erasing augmentation and expansion of the data to reduce class imbalance.
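As a rough illustration of the layer freezing described above, here is a minimal PyTorch sketch. It assumes that "the first convolutional block and half of the second" corresponds to the first three convolutional layers, which is my reading of the strategy rather than the exact thesis code. The helper `freeze_first_convs` is a hypothetical name, and a small stand-in `nn.Sequential` with the same layer order as the first two VGG-16 blocks replaces the pretrained model so the example runs without downloading weights.

```python
import torch.nn as nn


def freeze_first_convs(features: nn.Sequential, n_convs: int) -> list[int]:
    """Disable gradients for the first `n_convs` Conv2d layers.

    Returns the indices of the frozen layers inside `features`.
    """
    frozen = []
    for idx, layer in enumerate(features):
        if isinstance(layer, nn.Conv2d):
            if len(frozen) == n_convs:
                break
            for param in layer.parameters():
                param.requires_grad = False
            frozen.append(idx)
    return frozen


# Stand-in for the first two VGG-16 blocks (assumption: in practice this would
# be the `features` module of a pretrained torchvision VGG-16).
features = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=3, padding=1), nn.ReLU(inplace=True),
    nn.Conv2d(64, 64, kernel_size=3, padding=1), nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=2, stride=2),
    nn.Conv2d(64, 128, kernel_size=3, padding=1), nn.ReLU(inplace=True),
    nn.Conv2d(128, 128, kernel_size=3, padding=1), nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=2, stride=2),
)

# Block 1 has two conv layers; "half of block 2" is taken to mean one more.
frozen_indices = freeze_first_convs(features, n_convs=3)

# Only parameters that still require gradients should go to the optimizer.
trainable = [name for name, p in features.named_parameters() if p.requires_grad]
print(frozen_indices, trainable)
```

PyTorch has no separate input layer to freeze, so in this sketch the frozen input layer is simply the first convolution. The normalization and random erasing steps could be built from torchvision's `transforms.Normalize` and `transforms.RandomErasing`; the parameters used in the thesis are not given here.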

Results

The final macro averages of the model results were:

| Precision | Recall | F1 |
|---|---|---|
| 0.61 | 0.25 | 0.31 |
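For readers unfamiliar with macro averaging, the sketch below shows one common way such numbers are computed: precision, recall and F1 are calculated per class and then averaged with equal weight per class. This is an assumption about the evaluation, not the thesis code, and the labels are made-up toy data. It would also explain why the F1 above is not the harmonic mean of the reported precision and recall (which would be roughly 0.35).

```python
def macro_scores(y_true, y_pred, classes):
    """Return macro-averaged (precision, recall, F1) over `classes`.

    A class with no predictions (or no true samples) contributes 0.0.
    """
    precisions, recalls, f1s = [], [], []
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(classes)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Toy, imbalanced example (hypothetical labels): the classifier over-predicts
# the majority class "mary", similar in spirit to the bias described here.
classes = ["mary", "sebastian", "jerome"]
y_true = ["mary"] * 6 + ["sebastian"] * 2 + ["jerome"] * 2
y_pred = ["mary"] * 6 + ["sebastian", "mary"] + ["mary", "mary"]

macro_p, macro_r, macro_f1 = macro_scores(y_true, y_pred, classes)
print(round(macro_p, 3), round(macro_r, 3), round(macro_f1, 3))
```

Because every class counts equally, a minority class that is never predicted pulls the macro recall and F1 down sharply, even when most images are classified correctly.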

The precision was rather high for a baseline model, but the low recall, together with further investigation, revealed a bias towards the Virgin Mary, the class with significantly more data than the rest. This was mainly due to the relatively shallow depth of the model compared to the state-of-the-art model, a ResNet50. The investigation showed that a model with only 16 layers isn’t deep enough to capture more abstract features such as the entirety of Mary Magdalene’s ointment jar. It also showed that in many cases the baseline model extracted features similar to, or parts of, the more abstract features used by the state-of-the-art model. For Saint Sebastian, for example, the baseline relied on nudity textures together with arrow lines, whereas the state-of-the-art model focused on the specific areas where the arrows penetrate. For Mary Magdalene, the baseline relied on the texture of her curly hair and the lines of the ointment jar, whereas the state-of-the-art model focused specifically on the ointment jar.
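The feature comparisons above are based on class activation maps. As a minimal sketch of the general CAM idea for a fully convolutional classifier (not the thesis implementation), the code below applies a 1x1 convolution to the final feature maps to get one map per class, then uses global average pooling to turn those maps into logits. The names `CamHead` and `heatmap_for_prediction`, the 10 classes and the 224x224 output size are all placeholder assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CamHead(nn.Module):
    """1x1 conv classifier whose per-class outputs double as activation maps."""

    def __init__(self, in_channels: int, num_classes: int):
        super().__init__()
        self.classifier = nn.Conv2d(in_channels, num_classes,
                                    kernel_size=1, bias=False)

    def forward(self, feature_maps):
        cams = self.classifier(feature_maps)   # (N, classes, H, W)
        logits = cams.mean(dim=(2, 3))          # global average pooling
        return logits, cams


def heatmap_for_prediction(logits, cams, size=(224, 224)):
    """Upsample the map of the predicted class and scale it to [0, 1]."""
    predicted = logits.argmax(dim=1)
    cam = cams[torch.arange(cams.shape[0]), predicted]      # (N, H, W)
    cam = F.interpolate(cam.unsqueeze(1), size=size,
                        mode="bilinear", align_corners=False).squeeze(1)
    cam = cam - cam.amin(dim=(1, 2), keepdim=True)
    return cam / (cam.amax(dim=(1, 2), keepdim=True) + 1e-8)


torch.manual_seed(0)
# Random stand-in for the last VGG-16 feature maps of two 224x224 images.
feature_maps = torch.randn(2, 512, 7, 7)
head = CamHead(in_channels=512, num_classes=10)  # class count is a placeholder
with torch.no_grad():
    logits, cams = head(feature_maps)
    heatmaps = heatmap_for_prediction(logits, cams)
print(logits.shape, cams.shape, heatmaps.shape)
```

Because both the 1x1 convolution and the average pooling are linear, this gives the same logits as pooling first and then applying a linear layer, while keeping the spatial map that shows which regions contributed to each class.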